Practical ML

Open Source MLOps infrastructure available in the cloud or on-premises. Machine Learning APIs, based on state-of-the-art models.

Running on Open Source

Simple generic API

I can use a simple and reliable API. The REST API endpoint handles both sync and async calls.
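To make the difference between sync and async calls concrete, here is a minimal in-process sketch. It is not Skipper's actual API: `GenericApi`, `call_sync`, `call_async`, `get_result`, and the `predict` stand-in are all hypothetical names chosen for illustration, and a thread pool takes the place of a real REST endpoint.

```python
import uuid
from concurrent.futures import ThreadPoolExecutor


def predict(payload):
    # Hypothetical stand-in for an ML service; not part of Skipper.
    return {"sum": sum(payload["values"])}


class GenericApi:
    """Illustrative only: one entry point offering sync and async calls."""

    def __init__(self):
        self._pool = ThreadPoolExecutor(max_workers=2)
        self._tasks = {}

    def call_sync(self, payload):
        # Sync call: the caller blocks until the result is ready.
        return predict(payload)

    def call_async(self, payload):
        # Async call: hand back a task id at once; work runs in the background.
        task_id = str(uuid.uuid4())
        self._tasks[task_id] = self._pool.submit(predict, payload)
        return task_id

    def get_result(self, task_id, timeout=5.0):
        # Fetch the result of an earlier async call (waits up to `timeout` seconds).
        return self._tasks[task_id].result(timeout=timeout)


api = GenericApi()
sync_result = api.call_sync({"values": [1, 2, 3]})
task_id = api.call_async({"values": [4, 5]})
async_result = api.get_result(task_id)
```

With a sync call the client waits for the answer. With an async call it gets a task id right away and collects the result later, which suits long-running jobs such as training.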

Pluggable ML services

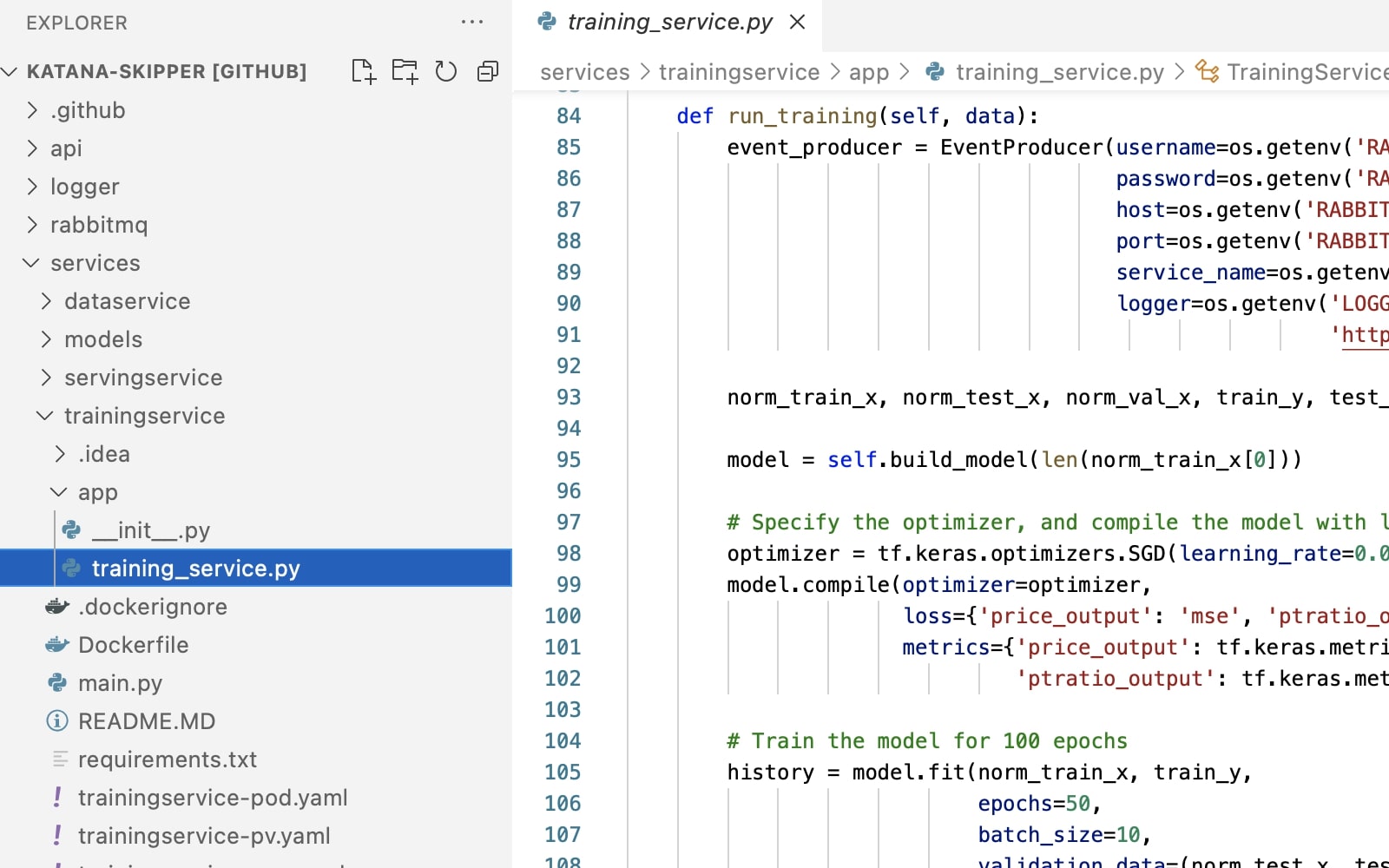

I can run any ML workload. Skipper MLOps infra runs ML services as Docker containers.

Reliable ML services architecture

I can run the system even when some of the services are down. This is ensured by event-based communication.
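The idea can be sketched in a few lines. The example below assumes an in-memory queue as a stand-in for whatever message broker the platform uses; `EventQueue`, `publish`, `pending`, and `drain` are hypothetical names for illustration, not Skipper's interfaces.

```python
from collections import deque


class EventQueue:
    """In-memory stand-in for a message broker (an assumption of this sketch)."""

    def __init__(self):
        self._events = deque()

    def publish(self, event):
        # Producers only append events; they never call a service directly.
        self._events.append(event)

    def pending(self):
        return len(self._events)

    def drain(self, handler):
        # Called by a consumer once it is running: handle events in arrival order.
        handled = []
        while self._events:
            handled.append(handler(self._events.popleft()))
        return handled


queue = EventQueue()

# The consuming service is down, but publishing still succeeds.
queue.publish({"task": "train", "id": 1})
queue.publish({"task": "predict", "id": 2})
pending_while_down = queue.pending()

# The service comes back and works through the backlog.
results = queue.drain(lambda e: f"{e['task']}#{e['id']} done")
```

Because the producer depends only on the queue, an unavailable consumer delays the work instead of failing the request.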

System traceability

I can trace and see what happens in the system. All events are logged through a dedicated container.

Run your MLOps solution on Skipper

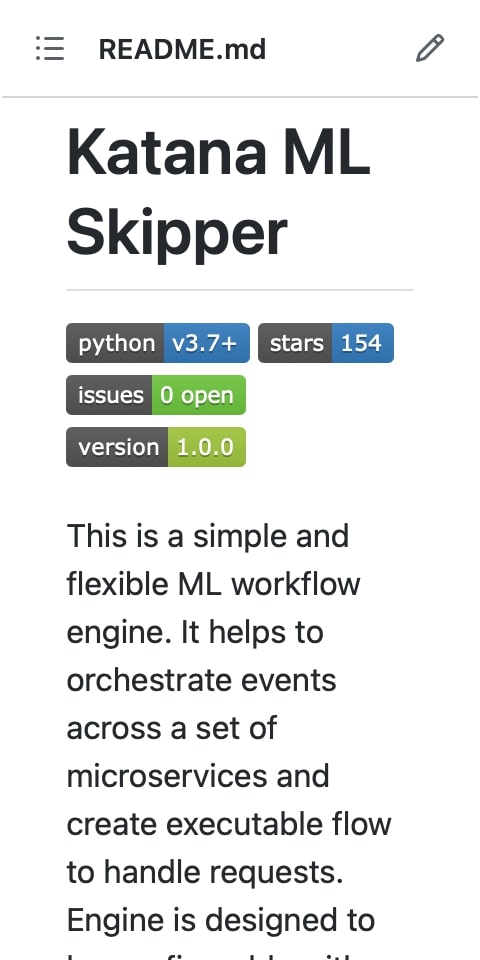

Skipper is a simple and flexible ML workflow engine.

Designed for

ML services

Many ML projects are implemented with Jupyter notebooks. It is really hard to scale and maintain such solutions when deployed to production. We recommend investing in MLOps early. Develop your ML solution with Skipper to be ready for production deployment.

Data processing

Data is processed in a separate container, which makes it easier to apply future changes.

Prediction service scaling

The prediction service is configured to run in a separate Kubernetes Pod for better scalability.